Taking a step beyond visualization

In our blog on pervasive sensing, we described how reliability teams over the past two decades have adopted a predictive maintenance strategy driven by live asset data streaming from a variety of edge sensors. This near real-time telemetry is routed over the network into a central data repository for visualization and analysis. It turns out that the visualization is easy. Analysis is another story.

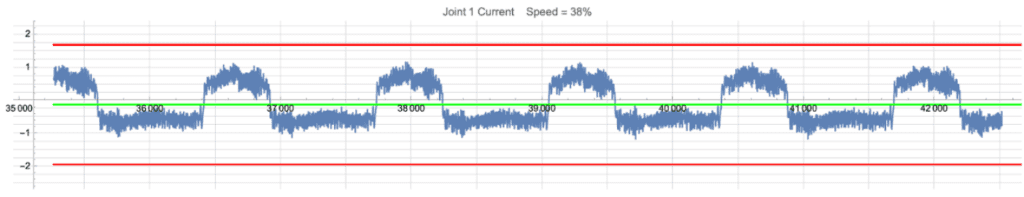

To see why, let’s take a simple example of an industrial cobot running a pick-and-place motion profile at 38% speed. Here is the current profile (in amps) of one of the joints.

Suppose you are a reliability engineer, and you see these repetitive surges as the cobot finds the item, pulls it, places it, and returns to its home position. You know that warning signs for the cobot joint would show up as anomalies such as spikes in current (indicating possible internal mechanical wear) or a steady growth in current (indicating increased friction and temperature in the joint).

Your first thought is to create an anomaly detection system based on a statistical model to identify these types of deviations. It seems straightforward: compute the mean value of the signal and wrap it in a band of, say, three standard deviations as shown below. The green line is the mean, and the red lines mark three standard deviations above and below it.
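As a minimal sketch of that idea, the mean-and-threshold model fits in a few lines of Python. The signal here is synthetic (a sine wave plus noise standing in for the joint current), and the function names `fit_band` and `flag_anomalies` are ours for illustration, not part of any product.

```python
import numpy as np

def fit_band(current_amps, n_sigma=3.0):
    """Return (mean, lower, upper) for a fixed n-sigma band around the mean."""
    x = np.asarray(current_amps, dtype=float)
    mean = x.mean()
    std = x.std()
    return mean, mean - n_sigma * std, mean + n_sigma * std

def flag_anomalies(current_amps, lower, upper):
    """Boolean mask of samples that fall outside the band."""
    x = np.asarray(current_amps, dtype=float)
    return (x < lower) | (x > upper)

# Synthetic stand-in for one joint's current at a fixed speed (assumption).
rng = np.random.default_rng(0)
t = np.linspace(0, 10, 1000)
baseline = 2.0 + 0.5 * np.sin(2 * np.pi * t) + rng.normal(0, 0.05, t.size)

mean, lower, upper = fit_band(baseline)

# Inject a single artificial spike and check that the band catches it.
observed = baseline.copy()
observed[500] += 5.0
flags = flag_anomalies(observed, lower, upper)
print("samples flagged:", int(flags.sum()))
```

Note that the band is learned from one operating condition only. Nothing in it knows about speed, load, or motion profile.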

Here’s where the problem comes in. Suppose your production manager wants to speed up the robot to 66%. Here is what will happen.

Your statistical model, built at 38% speed, will start throwing anomaly alerts immediately because it is invalid at the faster speed. To rectify this, you create a second statistical model for the 66% speed, with its own mean and standard deviation. Now you have two models, one for 38% speed and one for 66% speed. One week later, the production manager increases the speed to 90% and the anomaly alerts start again.

You can see where this is going. Wanting a single model that supports all of the cobot's operating speeds, you calculate means and standard deviations at several speeds and create a regression model that dials up the correct mean and standard deviation for each speed.
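Here is a rough sketch of what such a regression could look like. The calibration numbers are made up for illustration, and the linear relationship between speed and current statistics is an assumption, not something measured on a real cobot.

```python
import numpy as np

# Hypothetical calibration table (illustrative values only):
# speed in percent, mean current in amps, standard deviation in amps.
speeds = np.array([38.0, 66.0, 90.0])
means = np.array([2.0, 3.1, 4.2])
stds = np.array([0.35, 0.60, 0.85])

# Fit straight lines mean(speed) and std(speed). Linear form is an assumption.
mean_fit = np.polyfit(speeds, means, deg=1)
std_fit = np.polyfit(speeds, stds, deg=1)

def band_at_speed(speed_pct, n_sigma=3.0):
    """Return the (lower, upper) alert band predicted for a given speed."""
    mean = np.polyval(mean_fit, speed_pct)
    std = np.polyval(std_fit, speed_pct)
    return mean - n_sigma * std, mean + n_sigma * std

lower, upper = band_at_speed(50.0)
print(f"band at 50% speed: {lower:.2f} A to {upper:.2f} A")
```

The model now has exactly one input, speed. Any other variable that shifts the current is invisible to it.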

Now, suppose the weight of the object being picked and placed increases by, say, 1 kg. Your regression model is again invalid. So does there need to be a separate regression model for each load that the cobot might encounter? Now, suppose the motion profile itself must change depending on the part being produced.

Again, any existing regression models will be invalid with the new motion profile. Also, this cobot has 6 joints, and each joint will need its own model. Looking across the factory floor, you realize that your process has 40 cobots operating. The number of individualized regression models needed for this predictive maintenance strategy is now into the hundreds and possibly thousands.
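To put a rough number on it, here is the arithmetic as a short Python snippet. The joint and cobot counts come from the example above. The number of distinct payloads and motion profiles is a hypothetical assumption chosen only to show how quickly the total grows.

```python
joints_per_cobot = 6   # from the example above
cobots = 40            # from the example above

# Hypothetical assumptions for illustration:
payloads = 3           # distinct part weights handled
motion_profiles = 4    # distinct pick-and-place programs

per_joint_models = joints_per_cobot * cobots
total_models = per_joint_models * payloads * motion_profiles

print(per_joint_models)  # 240
print(total_models)      # 2880
```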

This example was for a cobot. Generally speaking, cobot motions produce telemetry that is uniform and repeatable. However, high-value rotational assets such as turbines, pumps, and CNC machines have much more complex telemetry relationships that are affected by many hidden variables and don’t lend themselves to regression analysis. This is particularly true for vibrational telemetry, which, for rotational assets, is the most useful condition monitoring measurement.

Let me make one final point about why statistical models are unsuitable for production-scale predictive maintenance strategies. Regression analysis assumes that there are one or several nearly independent variables that can predict the values of several dependent variables. Our experience with many customer data sets from complex assets is that this linkage is extremely weak, if it exists at all.

Much more commonly, asset measurements such as temperature, speed, pressure, and vibration are only relationally connected in their n-dimensional space rather than connected in an input-output functional way. That is to say, they arrange themselves in clusters based on the different production modes of the asset.

Often there are hundreds of clusters. These clusters capture all of the complex relationships between the sensor measurements of a compliant asset in its various production modes, and they indicate when those relationships are starting to stretch outside of normal operation.
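To make the cluster idea concrete, here is a toy sketch using a simple "leader" clustering scheme on synthetic data. This is not Amber's algorithm. The function names, the radius, and the two made-up operating modes (normalized speed, current, temperature) are all assumptions for illustration.

```python
import numpy as np

def learn_clusters(samples, radius):
    """Leader clustering: a sample joins the nearest centroid if it lies
    within `radius`; otherwise it starts a new cluster."""
    centroids, counts = [], []
    for x in samples:
        if centroids:
            d = np.linalg.norm(np.array(centroids) - x, axis=1)
            i = int(d.argmin())
            if d[i] <= radius:
                counts[i] += 1
                # Running-mean update of the matched centroid.
                centroids[i] = centroids[i] + (x - centroids[i]) / counts[i]
                continue
        centroids.append(x.astype(float))
        counts.append(1)
    return np.array(centroids)

def distance_to_nearest(x, centroids):
    """Distance from a sample to its closest learned cluster."""
    return float(np.linalg.norm(centroids - x, axis=1).min())

# Two synthetic production modes in (speed, current, temperature) space.
rng = np.random.default_rng(0)
mode_a = np.array([0.38, 0.20, 0.30]) + rng.normal(0, 0.01, (200, 3))
mode_b = np.array([0.66, 0.50, 0.40]) + rng.normal(0, 0.01, (200, 3))
radius = 0.1
centroids = learn_clusters(np.vstack([mode_a, mode_b]), radius)

normal_point = np.array([0.66, 0.50, 0.40])  # matches mode B
odd_point = np.array([0.38, 0.50, 0.30])     # slow speed, high current

print(len(centroids))
print(distance_to_nearest(normal_point, centroids) <= radius)
print(distance_to_nearest(odd_point, centroids) <= radius)
```

The interesting case is the odd point: each of its values has been seen before on its own, but never in that combination, so it falls far from every learned cluster. A per-signal threshold would not catch it.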

Amber

Amber is the only predictive maintenance technology that can capture the complex multi-variable relationships of high-value assets and turn those into actionable numerical scores.

Amber models are based on sensor relationships: Amber builds a high-dimensional unsupervised machine learning model capturing all of the complex relationships between asset sensors (known as sensor fusion), often involving hundreds of clusters.

Amber is self-tuning: Amber starts with a blank slate and uses either live asset data or historian data to automatically find the clusters that describe compliant asset operation for that asset’s usage pattern and current maintenance history. This also means that Amber is scalable. Connecting it to 100 assets so it can train 100 individualized models is no more difficult than connecting it to one. (See our blog about the problems with universal models.)

Amber is edge or cloud deployable: Amber can train and run either on-prem or in the cloud. Amber’s core segmentation algorithm is so efficient that it can train very complex unsupervised machine learning models on-site using commodity off-the-shelf computers, without GPU or cloud support.

Amber outputs easy-to-understand, real-time, predictive scores: Although Amber’s unsupervised ML models are very complex, Amber boils down the health status of your asset to just a few simple numerical measurements including the Anomaly Index (from 0 to 1000) and the Amber Warning Level (0 = compliant, 1 = asset is changing, 2 = asset is critical).
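As an illustration of how a downstream system might consume those two scores, here is a small Python sketch. Only the value ranges and the three level meanings come from the description above. The names `WarningLevel` and `summarize` are hypothetical and are not part of any Amber SDK.

```python
from enum import IntEnum

class WarningLevel(IntEnum):
    """Warning levels as described in the text (names are our own)."""
    COMPLIANT = 0  # asset is compliant
    CHANGING = 1   # asset is changing
    CRITICAL = 2   # asset is critical

def summarize(anomaly_index: int, warning_level: int) -> str:
    """Validate the two scores and return a short status string."""
    if not 0 <= anomaly_index <= 1000:
        raise ValueError("Anomaly Index is expected to lie between 0 and 1000")
    level = WarningLevel(warning_level)  # ValueError for anything but 0, 1, 2
    return f"anomaly_index={anomaly_index}, warning={level.name.lower()}"

print(summarize(120, 0))
```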